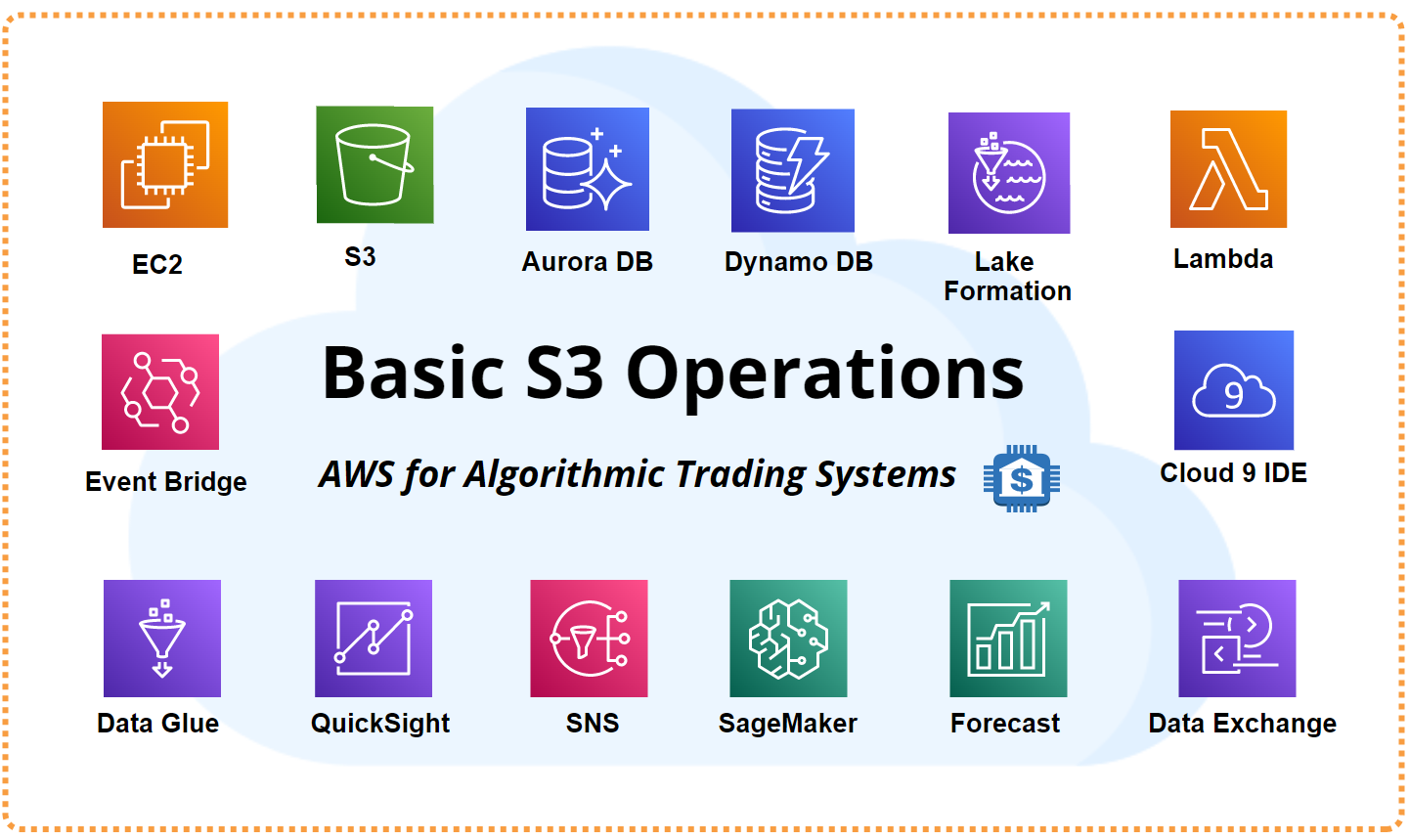

Building an AWS Trading System - Basic S3 Operations (Part 4) (Python Tutorial)

This post is part of a series of tutorials on building a scalable algorithmic trading system on the Amazon Web Services (AWS) cloud platform. Bear with me, as I’m learning AWS myself.

The advantage of using AWS is that it offers dozens of powerful, integrated software services that can be used to build a scalable, high-performance, low-cost production trading system.

In this post we are going to go over basic operations using S3, the AWS file storage service. S3 can be used, for example, to store price data or other data files in the cloud before importing them into a cloud database.

This story is solely for general information purposes and should not be relied upon for trading recommendations or financial advice. Source code and information are provided for educational purposes only and should not be relied upon to make an investment decision. Please review my full cautionary guidance before continuing.

Previous Tutorials

The following posts are suggested reading for this post to get you started:

Building an AWS Trading System - Registration & Environment Setup (Part 2)

Building an AWS Trading System - Basic EC2 Operations (Part 3)


What is S3?

Amazon Simple Storage Service (S3) is a cloud service for file and object storage. Its advantages are that it is scalable, cost-effective and highly available. Data is stored as objects in containers called ‘buckets’; within a bucket each object is identified by a key, and ‘/’-separated key prefixes behave like virtual folders.
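Bucket names are shared globally across all AWS accounts and must follow S3's naming rules, so a bucket name that looks fine locally can still be rejected. The sketch below checks only a subset of those rules (length 3 to 63, lowercase letters, digits, periods and hyphens, letter or digit at both ends, no adjacent periods, not formatted like an IP address); the function name looks_like_valid_bucket_name is hypothetical, and the official S3 naming rules remain the authority.

```python
import re

# A subset of the S3 general-purpose bucket naming rules; not exhaustive.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_ADDRESS = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def looks_like_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes a few of S3's bucket naming rules."""
    return (
        _BUCKET_NAME.fullmatch(name) is not None  # 3-63 chars of a-z, 0-9, '.', '-'
        and ".." not in name                      # no two adjacent periods
        and _IP_ADDRESS.fullmatch(name) is None   # must not look like an IP address
    )
```

A name that passes this check can still fail at creation time if another account already owns it.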

S3 can be used as part of an automated trading system to store price data files, news or social media data, log files, analytics files, charts and screenshots, backtest results or any other type of raw file data in the cloud. Other services such as AWS Glue or AWS Lambda functions can be used to process, transform and aggregate the data.
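Because S3 has no real directories, how you name object keys decides how easily the files can be found and processed later. The layout below (prices/SYMBOL/DATE.csv) is a hypothetical convention chosen for illustration, not one that S3 or this series prescribes, and price_data_key is a made-up helper name.

```python
from datetime import date


def price_data_key(symbol: str, trade_date: date, prefix: str = "prices") -> str:
    """Build an S3 object key such as 'prices/AAPL/2023-01-03.csv'.

    The '/' characters are simply part of the key; the S3 console and most
    tools display them as folders.
    """
    return f"{prefix}/{symbol.upper()}/{trade_date.isoformat()}.csv"


print(price_data_key("aapl", date(2023, 1, 3)))  # prints prices/AAPL/2023-01-03.csv
```

With keys grouped like this, a list_objects_v2 call with Prefix="prices/AAPL/" returns only that symbol's files, which is convenient when a Glue job or Lambda function processes one symbol at a time.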

Read more about AWS S3 here.

What is AWS Cloud 9?

AWS Cloud 9 is an Integrated Development Environment (IDE) that is accessed through a browser and can be used to write and debug source code using languages like JavaScript or Python.

In this series of tutorials we will be using the AWS Cloud 9 IDE to write, execute and store all our code.

To use Cloud 9, you will need an EC2 instance on which the Cloud 9 environment runs.

Read more about Cloud 9 here.

What is Boto3?

AWS services can be accessed in several ways, e.g. through the AWS Management Console, the AWS Command Line Interface (CLI), or one of the language-specific SDKs. In this series of tutorials we will be using the Boto3 library to interact with the different AWS services.

Boto3 is the AWS SDK for Python, provided by Amazon to interface with services such as EC2, S3 and Amazon Athena. For S3, for example, Boto3 provides a set of APIs to create and delete buckets and to upload and download files to and from a bucket.
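In Boto3, each AWS service is reached through a client object created with boto3.client(). A minimal sketch, assuming Boto3 is installed (pip install boto3) and credentials are already configured; make_s3_client is a hypothetical helper name, not part of Boto3.

```python
def make_s3_client(region_name=None):
    """Create a low-level Boto3 client for S3.

    Boto3 looks up credentials through its standard chain (environment
    variables, the shared ~/.aws config files, or an IAM role attached to
    the instance), so no access keys need to appear in the code. With
    region_name=None the configured default region is used.
    """
    import boto3  # imported here so the helper can be defined before Boto3 is installed

    return boto3.client("s3", region_name=region_name)
```

Every client method returns a plain Python dict; for example, the "Buckets" entry of the list_buckets() response holds one dict per bucket with its "Name" and "CreationDate".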

Read more about Boto3 here.

Here is the Boto3 AWS API documentation.

Tutorial Outline

In this tutorial we will go over these basic S3 operations:

Create a bucket

List all buckets

Upload a price data file to S3

Download the price data file from S3 and store it

Delete the bucket
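The five steps above map onto a handful of Boto3 S3 client calls. The sketch below is one possible arrangement, not the code used later in this tutorial: each helper takes an already created client so it can be reused, and the function names, the "prices/" key prefix and the run_demo arguments are hypothetical placeholders.

```python
import os


def create_bucket(s3, bucket: str, region: str) -> None:
    """Create `bucket` in `region` using the Boto3 S3 client `s3`."""
    if region == "us-east-1":
        # us-east-1 is the default location and is rejected as a LocationConstraint.
        s3.create_bucket(Bucket=bucket)
    else:
        s3.create_bucket(
            Bucket=bucket,
            CreateBucketConfiguration={"LocationConstraint": region},
        )


def list_bucket_names(s3) -> list:
    """Return the names of all buckets owned by the caller."""
    return [b["Name"] for b in s3.list_buckets().get("Buckets", [])]


def upload_file(s3, local_path: str, bucket: str, key: str) -> None:
    # upload_file takes (Filename, Bucket, Key); large files are sent as multipart uploads.
    s3.upload_file(local_path, bucket, key)


def download_file(s3, bucket: str, key: str, local_path: str) -> None:
    # download_file takes (Bucket, Key, Filename), the reverse order of upload_file.
    s3.download_file(bucket, key, local_path)


def delete_bucket(s3, bucket: str) -> None:
    """Delete every object in `bucket`, then the bucket itself (it must be empty).

    A versioned bucket would also need its object versions removed.
    """
    while True:
        # Each call returns at most 1,000 keys, so keep listing until nothing is left.
        contents = s3.list_objects_v2(Bucket=bucket).get("Contents", [])
        if not contents:
            break
        for obj in contents:
            s3.delete_object(Bucket=bucket, Key=obj["Key"])
    s3.delete_bucket(Bucket=bucket)


def run_demo(bucket: str, region: str, csv_path: str) -> None:
    """Walk through the five steps; call from Cloud 9 with your own values."""
    import boto3  # imported here so the helpers above can be used without Boto3

    s3 = boto3.client("s3", region_name=region)
    file_name = os.path.basename(csv_path)
    key = f"prices/{file_name}"

    create_bucket(s3, bucket, region)
    print(list_bucket_names(s3))
    upload_file(s3, csv_path, bucket, key)
    download_file(s3, bucket, key, f"downloaded_{file_name}")
    delete_bucket(s3, bucket)
```

Passing the client in, rather than creating it inside every helper, keeps one client (and one set of credentials) per script and makes the helpers easy to exercise against a stand-in object.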